The Language Resources and Evaluation Conference (LREC) is a keystone event for Machine Learning. AMPLYFI’s Head of Machine Learning shares his experience at the event, the papers he was involved in that were presented at the conference, and a few other papers he found to be exciting developments in the field.

Luis Espinosa-Anke is AMPLYFI’s Head of Machine Learning and a lecturer at the School of Computer Science and Informatics at Cardiff University. He received his PhD from Universitat Pompeu Fabra (Barcelona, Spain) in 2017. His interests include machine learning and natural language processing.

At AMPLYFI we carefully review the quality of our datasets. It is important to understand the data that our ML solutions are trained on, to judge whether our models are good based on how well they do on a test set, and to be aware of potential biases and limitations. In fact, data is at the core of all machine learning (ML). Yet most ML conferences and journals focus on the technical aspects of ML, for example a new algorithm, a new use case, or an analysis of how an existing algorithm behaves in a different setting. The development of datasets is in itself a very important aspect of ML, especially in natural language processing (NLP), where document collections can also tell us a lot about a certain demographic group, bias in a subsequent ML model, or the challenges in areas like chatbots, machine translation or web search. We should also not forget that there are many languages for which high-quality datasets simply do not exist.

This is why the Language Resources and Evaluation Conference (LREC), which was held this June in Marseille (France), is so important for NLP. It is a forum dedicated to developing language resources, a term that covers almost anything that is not a new model (a new piece of software, an annotated collection of documents, or even a set of guidelines for labeling data). In this post, I will summarise, first, the papers I was involved in that were presented at the conference, and second, a few other papers that I found particularly exciting, for example a tool for annotating word senses, semantic roles and abstract meaning representations all in one go, or a dataset for the nuclear domain (read how we used AMPLYFI’s tech to explore the dynamics of the energy sector).

Sentence Selection Strategies for Distilling Word Embeddings from BERT

Yixiao Wang, Zied Bouraoui, Luis Espinosa-Anke, and Steven Schockaert

Luis with Yixiao Wang, PhD student at Cardiff University and the paper’s first author

In this paper, we explore different strategies for learning static word embeddings from BERT (one of Google’s most successful machine learning architectures for language understanding). While contextual representations are now standard, sometimes we simply don’t have enough surrounding text to work with (for example, in a search query or in a knowledge graph). We found that it is possible to learn good word embeddings from BERT by selecting sentences in which a word appears together with words it often co-occurs with, as well as definitions.
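
To make the idea of sentence selection concrete, here is a minimal Python sketch. It is an illustration under assumptions, not the procedure from the paper: the helper names (`cooccurrence_counts`, `select_sentences`, `average_vectors`), the small stopword list, the use of raw co-occurrence counts and the whitespace tokenisation are all hypothetical choices made for this example. The sketch keeps the sentences in which the target word appears next to its most frequent companions, and then averages the vectors that a contextual model such as BERT would assign to the word in those sentences.

```python
from collections import Counter

# Hypothetical stopword list, only so that function words do not dominate the counts.
STOPWORDS = {"the", "a", "of", "is", "in", "on", "and", "to"}


def cooccurrence_counts(target, sentences):
    """Count how many sentences each word shares with `target`."""
    counts = Counter()
    for sent in sentences:
        tokens = set(sent.lower().split())
        if target in tokens:
            counts.update(tokens - STOPWORDS - {target})
    return counts


def select_sentences(target, sentences, top_k=2, max_sentences=2):
    """Keep the sentences containing `target` that mention the most of its
    top_k co-occurring words (assumed selection criterion)."""
    counts = cooccurrence_counts(target, sentences)
    # Rank by count; break ties alphabetically so the result is deterministic.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    frequent = {word for word, _ in ranked[:top_k]}
    scored = []
    for sent in sentences:
        tokens = set(sent.lower().split())
        if target in tokens:
            scored.append((len(tokens & frequent), sent))
    # The sort is stable, so sentences with equal scores keep corpus order.
    scored.sort(key=lambda pair: -pair[0])
    return [sent for _, sent in scored[:max_sentences]]


def average_vectors(vectors):
    """Average equal-length vectors into a single static embedding."""
    dim = len(vectors[0])
    return [sum(vec[i] for vec in vectors) / len(vectors) for i in range(dim)]


corpus = [
    "the bank approved the loan",
    "she sat on the river bank",
    "the bank raised the loan rate",
    "a bank holiday",
]
selected = select_sentences("bank", corpus)
```

In a real pipeline, the vectors passed to `average_vectors` would be the contextual representations of the target word in each selected sentence. The sketch leaves that encoding step out so that it runs without any model download.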

XLM-T: Multilingual Language Models in Twitter for Sentiment Analysis and Beyond

Francesco Barbieri, Luis Espinosa-Anke and Jose Camacho-Collados

Discussing the challenges of pretraining Twitter-based multilingual language models

In this paper, we introduce XLM-T, a multilingual language model specialized on Twitter. We also release a unified sentiment analysis dataset in 8 languages. Everything is available at the official repository and on the Hugging Face model hub. Below you can see the different languages the model was pre-trained on.

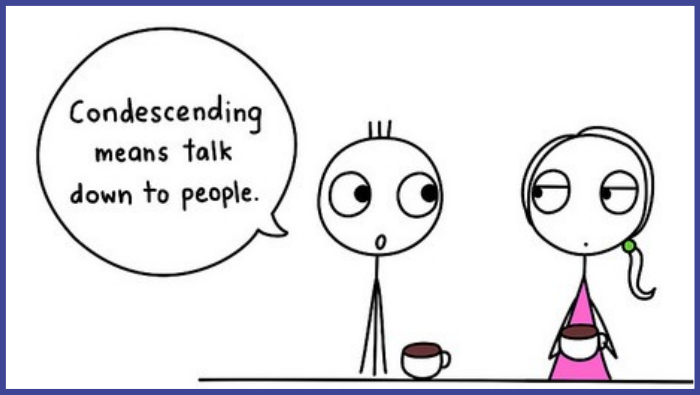

Pre-Training Language Models for Identifying Patronizing and Condescending Language: An Analysis

Carla Perez-Almendros, Luis Espinosa-Anke and Steven Schockaert

Detecting patronizing and condescending language against vulnerable communities is a particularly challenging problem: this type of language, while harmful, is often meant with good intentions, and is therefore more subtle than other harmful content like offensive language or hate speech. In this paper, we explore what happens when you fine-tune a BERT-based classifier on an auxiliary task first, and find that training the model to detect ironic or morally acceptable statements is beneficial. It turns out that when someone is condescending, they use hyperbole and strongly opinionated language, which is also typical in ironic situations.

And these are three awesome papers you should check out too!

Universal Semantic Annotator: the First Unified API for WSD, SRL and Semantic Parsing

Riccardo Orlando, Simone Conia, Stefano Faralli and Roberto Navigli

In this paper, the authors present a framework for performing semantic annotations on text: word sense disambiguation, semantic role labeling, and semantic parsing.

Few-Shot Learning for Argument Aspects of the Nuclear Energy Debate

Lena Jurkschat, Gregor Wiedemann, Maximilian Heinrich, Mattes Ruckdeschel and Sunna Torge

This is a carefully annotated dataset in the nuclear energy domain, broken down at the sentence level, and tagged with aspect labels such as costs or weapons.

Applying Automatic Text Summarization for Fake News Detection

Philipp Hartl and Udo Kruschwitz

This paper explores combining different input signals with free text for the task of fake news detection. The authors inject textual metadata and numerical features into news articles’ titles and text bodies. The concatenation of all features is then passed to a two-layer feed-forward network for classification. They also explore using an off-the-shelf automatic summarizer, and show that while performance degrades slightly with automatically generated summaries, it remains competitive with the full models.
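
The "concatenate, then classify" step can be sketched in a few lines of plain Python. This is a rough illustration rather than the authors' implementation: the function names, the feature sizes, the ReLU activation, the softmax output and the random (untrained) weights are all assumptions made for this example, since the summary above only says that the concatenated features go through a two-layer feed-forward network.

```python
import math
import random


def dense(x, weights, bias):
    """Affine layer: one output value per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(values):
    """Element-wise ReLU (assumed hidden activation)."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(values)
    exps = [math.exp(v - top) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases; a real model would be trained."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def classify(title_vec, body_vec, metadata_vec, numeric_feats, layer1, layer2):
    """Concatenate all feature groups and run a two-layer feed-forward pass."""
    x = title_vec + body_vec + metadata_vec + numeric_feats  # list concatenation
    hidden = relu(dense(x, *layer1))
    return softmax(dense(hidden, *layer2))


# Toy usage with made-up feature values: 2 + 2 + 1 + 2 = 7 input dimensions.
rng = random.Random(0)
layer1 = init_layer(7, 4, rng)
layer2 = init_layer(4, 2, rng)
probs = classify([0.1, 0.2], [0.3, 0.4], [0.5], [1.0, 0.0], layer1, layer2)
```

Here `probs` holds one probability per class (for instance, fake versus real). With untrained weights the values carry no meaning; the point is only to show how heterogeneous features end up in a single input vector.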